Bilingual speech segmentation

Relevant Publications

- Gilbert A, Lee J, Coulter K, Wolpert M, Kousaie S, Gracco V, Klein D, Titone D, Phillips N, Baum S, 2021 | Spoken Word Segmentation in First and Second Language: When ERP and Behavioral Measures Diverge

- Gilbert A, Wolpert M, Saito H, Kousaie S, Itzhak I, Baum S, 2019 | Adaptive and selective production of syllable duration and fundamental frequency as word segmentation cues by French-English bilinguals

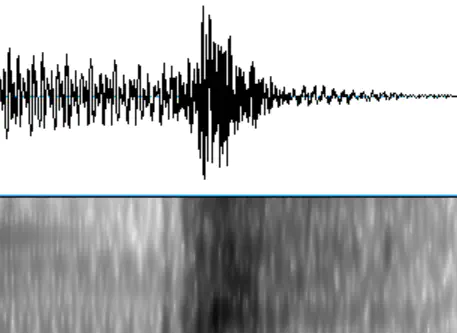

Unlike the words on this page, which are separated by spaces, words and phrases in natural speech are not divided by discrete boundaries. To properly segment and parse sentences, listeners use a combination of top-down (e.g., knowledge of words) and bottom-up (e.g., prosodic cues) information as signals for dividing speech into comprehensible components. Speakers must also consider this information when producing utterances to make sure their listeners understand.

As if speech segmentation were not already a difficult enough task, the acoustic cues for signalling boundaries also vary among languages! French, for instance, uses a large pitch contrast and lengthening to signal word and phrase offsets, while English relies more on lengthening and subtle differences in lexical stress. These crosslinguistic differences can make it difficult for second language learners to produce and comprehend appropriate segmentation cues. According to some accounts, the prosodic cues used for segmentation are perceptually wired in the brain in early life and resistant to modification by later life experience. On this view, an individual’s native language drives their automatic segmentation strategies in any language they later learn. Other accounts hold that the brain can adapt to use appropriate non-native segmentation cues in a second language.

Our own data suggest that experience and language dominance may indeed be more important than nativeness. When we consider brain data as well, the picture becomes even more nuanced: EEG data show that the brain can be sensitive to segmentation cues even when an individual cannot demonstrate this sensitivity behaviorally.